This research, carried out as a thesis at Vaasa University of Applied Sciences (VAMK) in 2025, investigates how a layered, proactive defence strategy can strengthen web server security using common open-source tools.

Why Web Server Security Matters

The security of web servers is often underestimated until it is too late. As cybercrime becomes increasingly commercialized, a trend highlighted by Cybercrime-as-a-Service offerings, the need for hardened systems is more urgent than ever. The thesis aimed to bridge the gap between theoretical best practices and practical, reproducible methods. It focused on reverse proxies, the SSL/TLS protocols (Secure Sockets Layer/Transport Layer Security, where TLS is the modern successor to the deprecated SSL), and firewall configuration, all evaluated in a testbed that simulated real-world threats.

What Was Researched – and How?

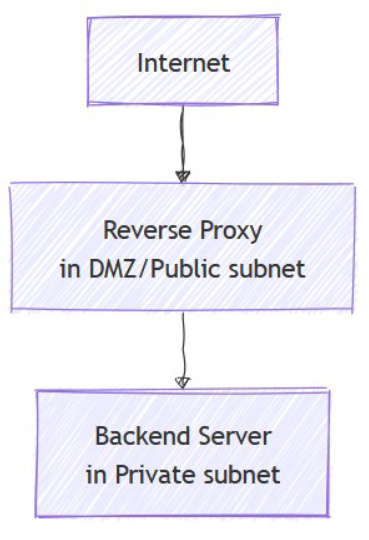

The study’s central aim was to examine how to configure a secure reverse proxy optimally and to test the web server’s defences using ethical hacking tools (Image 1). A reverse proxy is a server that sits in front of one or more web servers and forwards client requests to them. It improves security by hiding backend systems from direct internet exposure. Three research questions guided the thesis: How can the Nginx web server be optimally configured as a secure reverse proxy? What kind of penetration testing can effectively validate the setup? And which best practices can help mitigate real-world threats such as man-in-the-middle (MITM) attacks and denial-of-service (DoS) floods?
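To make the forwarding role more concrete, the following Python sketch shows the kind of header rewriting a reverse proxy commonly performs: it drops hop-by-hop headers, tells the backend which client connected, and removes response headers that could reveal backend details. It is an illustration under assumptions, not the thesis’s configuration; the function names, the header lists, and the assumption that TLS terminates at the proxy are all hypothetical.

```python
from __future__ import annotations

# Hop-by-hop headers describe a single connection and are not forwarded by proxies.
HOP_BY_HOP = frozenset({
    "connection", "keep-alive", "proxy-authenticate", "proxy-authorization",
    "te", "trailer", "transfer-encoding", "upgrade",
})

# Response headers assumed to fingerprint the backend (hypothetical list).
BACKEND_REVEALING = frozenset({"server", "x-powered-by"})


def _strip(headers: dict[str, str], extra: frozenset[str] = frozenset()) -> dict[str, str]:
    """Lower-case header names and drop hop-by-hop and any extra headers."""
    # Header names listed in Connection are also hop-by-hop for this connection.
    named = {
        token.strip().lower()
        for name, value in headers.items()
        if name.lower() == "connection"
        for token in value.split(",")
    }
    drop = HOP_BY_HOP | named | extra
    return {k.lower(): v for k, v in headers.items() if k.lower() not in drop}


def to_upstream(client_ip: str, headers: dict[str, str]) -> dict[str, str]:
    """Headers the proxy sends to the backend for one client request."""
    out = _strip(headers)
    prior = out.get("x-forwarded-for")
    # Append the connecting client's address to any existing chain.
    out["x-forwarded-for"] = f"{prior}, {client_ip}" if prior else client_ip
    out["x-real-ip"] = client_ip
    out["x-forwarded-proto"] = "https"  # assumes TLS terminates at the proxy
    return out


def to_client(headers: dict[str, str]) -> dict[str, str]:
    """Headers the proxy returns to the client for one backend response."""
    return _strip(headers, extra=BACKEND_REVEALING)
```

In Nginx, the same ideas are usually expressed with `proxy_set_header` directives (for example, `X-Forwarded-For` set to `$proxy_add_x_forwarded_for`), and by default Nginx does not pass the backend’s `Server` header on to clients.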

The research was conducted in a virtual lab environment designed to reflect real-world server deployments. The test network consisted of three virtual machines: an Nginx reverse proxy, a Virtuoso backend server, and a Kali Linux system used for client access and penetration testing. The backend server is the system that processes and stores data; here, that role was filled by Virtuoso, an open-source database server that handles complex queries and data integration. Kali Linux is a specialized operating system that cybersecurity professionals use to perform security testing and simulate attacks.

Although the setup was local and controlled, it followed industry-relevant practices: deploying the reverse proxy in a demilitarized zone (DMZ), applying firewall rules, using custom SSL certificates, and serving test traffic over HTTPS (Hypertext Transfer Protocol Secure). A DMZ is a network segment that separates public-facing servers from internal systems, reducing the risk to critical data if a breach occurs.

Key Strategies and Results

TLS Hardening and Certificate Management

TLS was a cornerstone of the security framework. Only TLS 1.2 and 1.3 were permitted, and only strong cipher suites were allowed. Server certificates were signed by a local Certificate Authority (CA), and the browser was manually configured to trust that CA, ensuring that communication was both encrypted and authenticated. A CA is a trusted entity that vouches for a server’s identity by signing its certificate; a client that trusts the CA can verify the server before any encrypted data is exchanged.
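The TLS policy described above can be sketched with Python’s standard `ssl` module: a server context that refuses anything older than TLS 1.2 and restricts TLS 1.2 to AEAD cipher suites, plus a client context that trusts a local CA file. The cipher string, the function names, and the certificate paths are assumptions for illustration; the thesis applied its policy in Nginx, not in Python.

```python
import ssl

# Assumed policy: forward-secret (ECDHE) AEAD suites only for TLS 1.2.
# OpenSSL configures TLS 1.3 suites separately, and those are all AEAD.
TLS12_CIPHERS = "ECDHE+AESGCM:ECDHE+CHACHA20"


def make_server_context(certfile=None, keyfile=None):
    """Server-side context enforcing TLS 1.2+ and strong TLS 1.2 ciphers."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSLv3, TLS 1.0 and 1.1
    ctx.set_ciphers(TLS12_CIPHERS)
    if certfile:
        # Certificate signed by the local CA (hypothetical file paths).
        ctx.load_cert_chain(certfile, keyfile)
    return ctx


def make_client_context(local_ca_file=None):
    """Client-side context that verifies certificates and hostnames.

    When a CA file is given, only that CA is trusted: the programmatic
    counterpart of adding a local CA to a browser's trust store.
    """
    ctx = ssl.create_default_context(cafile=local_ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

In Nginx, the corresponding settings are the `ssl_protocols TLSv1.2 TLSv1.3;` and `ssl_ciphers` directives. Because OpenSSL handles TLS 1.3 suites separately, the cipher string in this sketch only affects TLS 1.2 connections.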

Reverse Proxy Configuration

Nginx was configured not only to forward traffic but also to protect it. HTTP headers were hardened, rate limiting was implemented to mitigate brute-force and flooding attacks, and only the necessary ports (such as 443 for HTTPS) were left open.
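Nginx documents its `limit_req` module as using the "leaky bucket" method. The Python sketch below is a simplified model of that behaviour with a `burst` allowance and the `nodelay` option, tracked per client address; the class name and the rate and burst values are hypothetical, not the thesis’s settings.

```python
class LeakyBucket:
    """Simplified per-client model of Nginx limit_req with burst and nodelay."""

    def __init__(self, rate, burst):
        self.rate = rate    # requests per second that drain from the bucket
        self.burst = burst  # excess requests tolerated above the steady rate
        self._state = {}    # client key -> (excess, time of last accepted request)

    def allow(self, key, now):
        """Return True if the request is served, False if it is rejected."""
        if key not in self._state:
            self._state[key] = (0.0, now)  # a client's first request always passes
            return True
        excess, last = self._state[key]
        # Drain at `rate` for the elapsed time, then add this request.
        excess = max(0.0, excess - (now - last) * self.rate + 1)
        if excess > self.burst:
            return False  # Nginx would reply 503 by default (limit_req_status)
        self._state[key] = (excess, now)
        return True


bucket = LeakyBucket(rate=2.0, burst=3)
# Six requests from one client arriving at the same instant.
print([bucket.allow("203.0.113.7", 0.0) for _ in range(6)])
```

A roughly equivalent Nginx configuration would be `limit_req_zone $binary_remote_addr zone=perip:10m rate=2r/s;` in the `http` block and `limit_req zone=perip burst=3 nodelay;` in a `location`. Without `nodelay`, Nginx delays requests within the burst instead of serving them immediately, which this model does not capture.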

Firewall Policies

Both servers used the Uncomplicated Firewall (UFW) to restrict network traffic. Rules were applied so that only the reverse proxy could reach the backend server, mirroring a typical segmented enterprise architecture.
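The segmentation can be modelled in a few lines of Python: an ordered rule list evaluated first-match-wins with a default-deny policy, as UFW does, plus a helper that renders the rules as `ufw` commands. The IP address is hypothetical, and port 8890 is Virtuoso’s default HTTP port, assumed rather than confirmed to be the port used in the thesis.

```python
import ipaddress

# Hypothetical lab addresses; the thesis's IP plan is not given here.
PROXY_IP = "10.0.1.10"   # Nginx reverse proxy in the DMZ
BACKEND_PORT = 8890      # Virtuoso's default HTTP port (assumed to be in use)

# Ordered (action, source network, TCP port) rules. As in UFW, the first
# matching rule wins and unmatched traffic falls through to the default policy.
PROXY_RULES = [("allow", "0.0.0.0/0", 443)]
BACKEND_RULES = [("allow", f"{PROXY_IP}/32", BACKEND_PORT)]


def permitted(rules, src_ip, dport, default="deny"):
    """Return True if an incoming TCP connection would be accepted."""
    src = ipaddress.ip_address(src_ip)
    for action, net, port in rules:
        if port == dport and src in ipaddress.ip_network(net):
            return action == "allow"
    return default == "allow"


def to_ufw(rules):
    """Render the rules as ufw commands, starting with the default policies."""
    cmds = ["ufw default deny incoming", "ufw default allow outgoing"]
    for action, net, port in rules:
        network = ipaddress.ip_network(net)
        if network.prefixlen == 0:
            cmds.append(f"ufw {action} {port}/tcp")  # any source address
        else:
            # Show single hosts without the /32 suffix.
            src = (str(network.network_address)
                   if network.prefixlen == network.max_prefixlen else str(network))
            cmds.append(f"ufw {action} from {src} to any port {port} proto tcp")
    return cmds
```

Here `to_ufw(BACKEND_RULES)` ends with `ufw allow from 10.0.1.10 to any port 8890 proto tcp`, so a client such as the Kali machine can reach the backend only through the proxy. Management access such as SSH is deliberately left out of this sketch.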

Results

In the lab environment, a securely configured reverse proxy, combined with TLS hardening and firewall segmentation, withstood a variety of common attacks. Rate limiting, strong cipher suite enforcement, and header hardening in Nginx all contributed to improved resilience. Penetration testing showed that attempts to exploit weak ciphers, as well as simulated MITM and DoS attacks, were effectively mitigated by the defensive configuration. Traffic captures in Wireshark and checks with OpenSSL confirmed that traffic was encrypted, and no plaintext data leakage was observed. Even under simulated DoS conditions, server performance remained stable thanks to Nginx’s rate limiting and UFW’s network filtering.
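The thesis validated its configuration with penetration testing, Wireshark, and OpenSSL. A complementary check that is easy to automate is auditing the response headers the proxy returns. The Python sketch below compares a header dictionary against a baseline of commonly recommended security headers; the baseline and the function name are hypothetical, since the exact header set used in the thesis is not listed here.

```python
# Hypothetical baseline: header name -> substring its value must contain
# ("" accepts any value). Not the thesis's exact configuration.
EXPECTED = {
    "strict-transport-security": "max-age=",
    "x-content-type-options": "nosniff",
    "x-frame-options": "",
    "content-security-policy": "",
}


def audit_headers(headers):
    """Return a list of findings; an empty list means the baseline is met."""
    lower = {k.lower(): v for k, v in headers.items()}
    findings = []
    for name, required in EXPECTED.items():
        if name not in lower:
            findings.append(f"missing {name}")
        elif required not in lower[name]:
            findings.append(f"weak {name}: {lower[name]!r}")
    # A digit in Server (e.g. "nginx/1.x") suggests the version is disclosed.
    server = lower.get("server", "")
    if any(ch.isdigit() for ch in server):
        findings.append(f"server version disclosed: {server!r}")
    return findings
```

In Nginx, such headers are typically added with `add_header`, and `server_tokens off;` removes the version number from the `Server` header. The input dictionary can come from any HTTP client, for example `dict(response.headers.items())` for a response returned by `urllib.request.urlopen`.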

Insights and Recommendations

One of the thesis’s key findings is that security is not a one-time task but an ongoing process that must adapt to evolving threats. Regular testing, especially with simulated attacks, proved essential for identifying configuration weaknesses before real attackers could exploit them. Equally important is network isolation: placing critical components such as backend servers in separate, restricted segments reduces the risk of lateral movement if a breach does occur.

The work concludes with a clear message for system administrators and developers: layered security, combining TLS, reverse proxies, firewalls, and real-time monitoring, is not just recommended but necessary. Moreover, the use of freely available open-source tools such as Nginx, UFW, and Virtuoso shows that robust security does not require expensive enterprise solutions.

By turning theoretical best practices into applied configurations and testing them under simulated threat conditions, this study offers a valuable framework for securing web services in both academic and professional contexts.

Final Thoughts

This research reinforces the idea that web server security is not only a technical concern but also a business imperative. Even smaller organizations and projects can build highly secure infrastructure using well-documented tools and best practices. As cyber threats evolve, defences must evolve with them, and this study offers a blueprint for anyone aiming to secure their digital front door.

This blog text is based on a thesis conducted by Ahlam Fazlu Rahman and supervised by Antti Virtanen. The thesis can be found at: https://urn.fi/URN:NBN:fi:amk-2025060721416