Preventing wind turbine disasters: the role of AI

Imagine a wind turbine whose hub sits 150 meters above the ground. Maintenance personnel regularly inspect the turbine and its blades to make sure everything is in order. The blades are inspected with drones that photograph them, but checking the hub requires a person to climb the tower. Now imagine that a material defect, initially microscopic, has grown because of vibrations and in turn causes even stronger vibrations. Eventually the lubricating oil catches fire, and the whole turbine collapses (see Figure 1).

Could this have been prevented? While this scenario is fictional, similar incidents have occurred (see e.g. https://www.nyteknik.se/energi/vindkraftverk-i-lagor-utanfor-ystad-ger-oss-inte-upp-dar/2053905) (Ny Teknik, 2023), and will continue to happen.

While regular preventive maintenance can indeed reduce the risk, wouldn’t it be even more effective to have an additional “mechanism” that recognizes abnormal vibrations and alerts maintenance to take immediate action, perhaps shutting down the turbine and sending personnel onsite? This “mechanism” could simply be a few industrial accelerometers, similar to those in your smartphone or smartwatch, detecting vibrations at strategically chosen locations. The sensors would be connected to an “edge device”, which might be a single-board computer such as a Raspberry Pi, or a microcontroller. In this context, “edge” simply describes a device that is physically close to the sensors and to the equipment or phenomena it is observing, so there is minimal delay between an observation and a possible action.

Because the edge device analyzes the sensor data locally, only a small amount of data, such as a status code, would need to be transmitted. For instance:

- RED condition = EMERGENCY, shutting down immediately without waiting for maintenance to take action

- ORANGE condition = severe anomalies detected, shutting down in 5 minutes unless maintenance takes over

- YELLOW condition = anomalies detected, reducing speed in 5 minutes unless maintenance takes over

- GREEN condition = everything is ok
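As a rough illustration of how an edge device could reduce a window of accelerometer samples to one of the four conditions above, here is a minimal Python sketch. It uses a plain fixed-threshold rule on the RMS vibration level as a stand-in for a trained ML model, and the threshold values, the `Condition` enum, and the function names are all hypothetical, chosen only for this example.

```python
import math
from enum import Enum


class Condition(Enum):
    GREEN = "everything is ok"
    YELLOW = "anomalies detected"
    ORANGE = "severe anomalies detected"
    RED = "EMERGENCY"


# Hypothetical RMS limits in g, checked from most to least severe.
# Real limits would have to come from the turbine's own vibration data.
LIMITS = [
    (2.0, Condition.RED),
    (1.0, Condition.ORANGE),
    (0.5, Condition.YELLOW),
]


def rms(samples):
    """Root mean square of one window of accelerometer samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def classify_window(samples):
    """Map one window of samples to a condition code."""
    level = rms(samples)
    for limit, condition in LIMITS:
        if level >= limit:
            return condition
    return Condition.GREEN


if __name__ == "__main__":
    window = [0.6, -0.7, 0.65, -0.6]  # made-up readings in g
    print(classify_window(window).name)
```

Only the resulting condition name would need to leave the device, not the raw samples.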

The alternative to edge devices would be to have the same sensors send their raw data via cable or satellite connection to the cloud, or to a centralized server monitoring all wind turbines in the park. This typically causes delays because of the sometimes huge amounts of data being sent. With, for example, 5 sensors per turbine, each registering vibrations in 3 axes at a sampling frequency of 200 Hz, the data rate would be 5 × 3 × 200 = 3000 values per second, or 3000 bytes per second if each value fits in a single byte. True, this might not sound like much, but if there are 20 turbines in the park and a slow (and expensive) satellite connection is used, there will inevitably be some delays.
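The arithmetic for the raw data stream can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the function names are made up for this example, and the 2 bytes per value in the last line is an assumption (a 16-bit reading), not a figure from any particular sensor.

```python
def values_per_second(sensors, axes, rate_hz):
    """Number of raw sensor values one turbine produces per second."""
    return sensors * axes * rate_hz


def bytes_per_second(values, bytes_per_value):
    """Raw data rate for a given value size."""
    return values * bytes_per_value


per_turbine = values_per_second(sensors=5, axes=3, rate_hz=200)
park = per_turbine * 20  # 20 turbines in the park

print(per_turbine)                # 3000 values per second per turbine
print(park)                       # 60000 values per second for the park
print(bytes_per_second(park, 2))  # 120000 bytes per second at 2 bytes per value
```

By comparison, an edge device that only reports a condition code sends a few bytes whenever the status is updated.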

AI in an Educational Setting

This section defines TinyML and edge computing and describes how AI can play a role in an educational setting. TinyML refers to the field of Machine Learning (ML) that focuses on deploying and running ML models on resource-constrained devices, typically embedded systems. TinyML encompasses the development and implementation of ML algorithms and models on low-power microcontrollers and other small devices. The goal of TinyML is to bring the power of machine learning to devices with limited computational resources, such as sensors, wearables, IoT devices, and other edge devices. By enabling ML inference directly on these devices, it reduces the need for constant communication with the cloud or remote servers, providing real-time and localized decision-making capabilities (Situnayake & Plunkett, 2023).

Why Introduce Edge AI in the Classroom?

Part of the answer is that more and more of our lives are surrounded by electronic devices, each of which has significantly more computing capacity than the first moon landers. The devices are getting smarter and smaller. A smartwatch, for example, might already have over 10 sensors (for acceleration, ambient and body temperature, air pressure and altitude, heart rate and heart rate variability, blood pressure, glucose, images and light, sound, etc.). Devices that are not meant to be connected to the cloud all the time, in particular, will need some type of edge capability. Someone needs to be at the forefront, designing new devices with edge capability as well as new machine learning models and methods. Many of these people will have an engineering or IT background, and they would benefit from being introduced to the technology during their bachelor’s or master’s studies at the latest.

How do you introduce Edge AI to your students? A case study

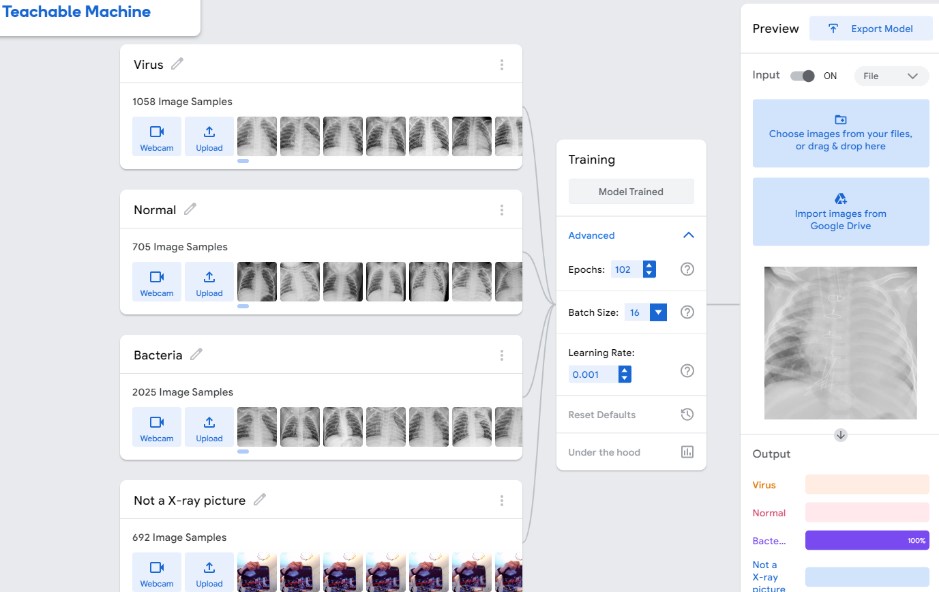

This partially depends on who is in the classroom: children, adults, experts, novices, and so on. It also depends on how much time you have available, whether one hour or perhaps even one week. Nevertheless, most people in the classroom will benefit from hands-on experience blended with some theory in between. Here I’ll focus on students at the university level, but at a high level the main aspects are the same regardless of age group or level of expertise. By using an existing (and in many cases free) AI platform, you don’t need to write a single line of code to get started with AI. In my course, I gave the students a choice of using Google’s Teachable Machine (see Figure 2), Edge Impulse, or some other platform they found.

Teachable Machine is very quick to get started with. Using a web camera, you just need to take a few dozen or a few hundred pictures of, for example, two different objects, train the model, and then ask it to classify what it “sees” through the camera. As a demonstration of a more unorthodox use, I uploaded over 4000 freely available X-ray pictures of normal lungs, lungs with a viral infection, and lungs with a bacterial infection. After training for approximately 5 minutes, the model was able to correctly classify X-ray pictures it had not seen before! While this model obviously can’t be used for clinical purposes, it still shows that AI technology as such is within reach of complete laypersons and can be tried out for a wide variety of purposes.

Edge Impulse

Edge Impulse is both a platform and the name of the company behind it, and it has become one of the most popular platforms in its area. The learning curve is slightly steeper than with Teachable Machine: instead of spending 2 to 5 minutes on your first ML model, you will probably spend 10 to 15 minutes. But whereas Teachable Machine is limited to pictures, sound, and poses, Edge Impulse supports an almost unlimited range of data types. Basically, any sensor that can send serial data can be used: accelerometers, thermometers, radars, gas sensors, cameras, microphones, etc.
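To give an idea of what “serial data” from a sensor can look like on the receiving side, here is a small Python sketch that parses one text line of readings. It assumes, purely for illustration, that the sensor prints one comma-separated reading per line (for example three accelerometer axes); the line format and the function name `parse_reading` are assumptions of this example and not part of any platform’s API.

```python
def parse_reading(line, expected_values=3):
    """Turn one text line such as '0.12,-0.98,0.05' into a tuple of floats.

    Raises ValueError if the line does not contain the expected number
    of values or if a value is not a number.
    """
    parts = line.strip().split(",")
    if len(parts) != expected_values:
        raise ValueError(
            f"expected {expected_values} values, got {len(parts)}: {line!r}"
        )
    return tuple(float(part) for part in parts)


if __name__ == "__main__":
    # Made-up lines, as they might arrive from a 3-axis accelerometer.
    for raw in ["0.12,-0.98,0.05\n", "0.10,-1.01,0.07\n"]:
        print(parse_reading(raw))
```

Rejecting malformed lines early keeps incomplete readings, which are common on a serial link, out of the training data.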

To get started with Edge Impulse, you only need to sign up for a free developer account and use a smartphone as your device. Connect the phone to Edge Impulse by following the getting started guide, and take pictures of a few different objects. After training the model, you can deploy it to your phone to test it live. Once the trained model is downloaded, you don’t need an internet connection: everything runs on your phone, which has magically transformed into an edge device.

Edge Impulse is my go-to AI-platform when it comes to machine learning. I have also written several tutorials covering how I have used it for numerous purposes and with different devices. One of them (https://edge-impulse.gitbook.io/experts/image-projects/silabs-xg24-card-sorting-and-robotics-2) shows how I have used the platform for machine vision purposes, sorting playing cards and solid waste with the help of a robot arm. This project essentially combined three different disciplines in the course I’ve been teaching: programming in Python, machine vision using TinyML on an edge device, and robotics.

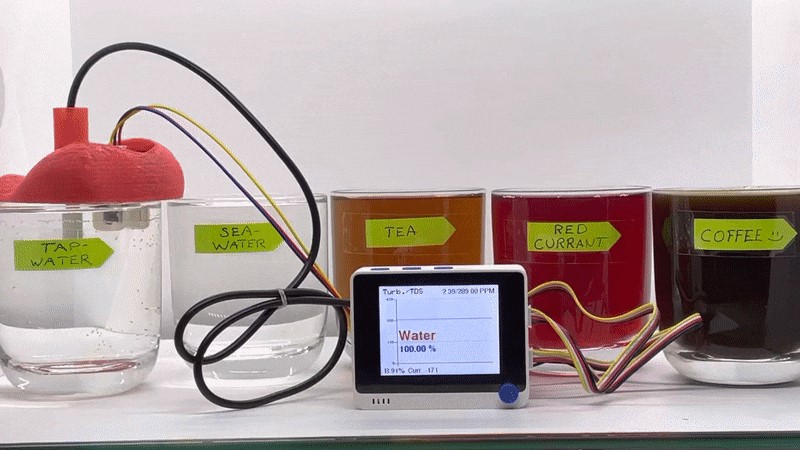

Another tutorial (https://edge-impulse.gitbook.io/experts/novel-sensor-projects/liquid-classification-seeed-wio-terminal) covers how I’ve created an artificial “tongue” by using two liquid sensors and an embedded device. This contraption is able to correctly classify the five different liquids I trained it on (see Figure 4).

Students’ views on learning AI with a hands-on approach

As mentioned earlier, I’ve been teaching a computer science course covering the basics of Python programming and the fundamentals of AI. This was the first time I taught AI myself. Five classes from the School of Technology participated, a total of 126 students. Their major studies are related to the environment, energy, or machine construction.

Students were tasked with completing two distinct AI projects using either Teachable Machine, Edge Impulse, or any other platform of their choice. They were also required to draft a report on their findings and present their projects orally at the end of the course. Most surprising for me as a teacher was the innovativeness the students showed! One student, an expert in rock materials and crystals, used AI to classify different rocks. Another, a musician who plays violin and cello, used AI to classify different string techniques. A third used AI to classify different cultural landscapes from an environmental perspective, and others classified fish, birds, dance poses, and so on.

Overall, the feedback from the students was very positive. Most did not know much about AI before the course, but afterwards they had a much clearer picture of the benefits and risks of AI. I was glad that many also thought about the ethical side of AI, such as how it can be misused or how an insufficiently tested model can wreak havoc. As the AI part was literally a hands-on exercise (using smartphones, cameras, real objects, etc.), it gave them a break from the normal lectures, which they saw as a big plus and which, according to them, also helped them learn better. The theory part was kept to a minimum by design; those who were inspired will find plenty of excellent educational material on the web to learn more about what happens behind the scenes.

The primary suggestion for improvement was that the AI segment should have been given more emphasis during the course, with less focus on Python programming. This is in line with my own reflections during the course. Let’s see what and how new students will learn in the upcoming courses.